With the explosion of generative AI technology, many organizations are now starting to look at how they can benefit from these new opportunities. While services like Copilot and ChatGPT are readily available, many organizations need to run their own AI infrastructure.

Why? Because AI creates value when you connect it to your own data. This data lives in your existing digital platforms, on-premises and/or in the cloud(s). There are two concerns here: performance and security. Performance, because to be fast and useful, AI needs high-performance, low-latency access to your data. Security, because you need to comply with regulations and be sure that AI services do not leak data to external organizations.

Traditional IT architecture has handled this for years for non-AI applications. Now we need to extend this capability to handle AI workloads as well. This is what Enterprise AI infrastructure is about. So what does Enterprise AI infrastructure require? There are two distinct components that you may not already have: GPU nodes and container infrastructure.

The GPU is the computing engine required to train AI models and to run inference with them, such as the models behind ChatGPT. GPU stands for graphics processing unit; GPUs were originally built for graphics, but today they are also used for AI and machine learning because they are extremely effective at parallel processing. CPUs run most traditional applications and are optimized for fast, largely sequential work, so they can be thought of as serial processors. A modern infrastructure needs both to support different types of applications.

Containers are a modern, scalable way of deploying applications, and they are gradually replacing virtual servers. AI models are normally deployed as containers.
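To make the container idea concrete, here is a minimal sketch of a Kubernetes Pod manifest for a containerized model server that asks the scheduler for a GPU. It is built in Python as a plain dictionary and printed as JSON, a format `kubectl apply -f` also accepts. It assumes a cluster where the NVIDIA device plugin (or GPU Operator) advertises GPUs as the `nvidia.com/gpu` resource; the helper `gpu_pod_manifest`, the pod name, the image and the port are hypothetical placeholders, not part of any product described here.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1, port: int = 8000) -> dict:
    """Build a minimal Kubernetes Pod manifest for a containerized model server.

    Hypothetical helper for illustration. The `nvidia.com/gpu` resource name is
    the one advertised by the NVIDIA device plugin; the pod only schedules if
    the cluster runs that plugin (or the GPU Operator).
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                    # GPUs are requested as an extended resource under limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical image name, for illustration only.
    manifest = gpu_pod_manifest("llm-demo", "registry.example.com/llm-server:latest")
    print(json.dumps(manifest, indent=2))
```

Saving the printed output as `pod.json` and running `kubectl apply -f pod.json` would ask Kubernetes to place the container on a node with a free GPU, which is the basic mechanism a GPU-enabled container platform builds on.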

But an AI platform is a lot more than that, because you want to be able to experiment with different inference models and run models for workloads that have different performance requirements. Lately I’ve been looking into the NVIDIA AI Enterprise platform and Nutanix GPT-in-a-Box.

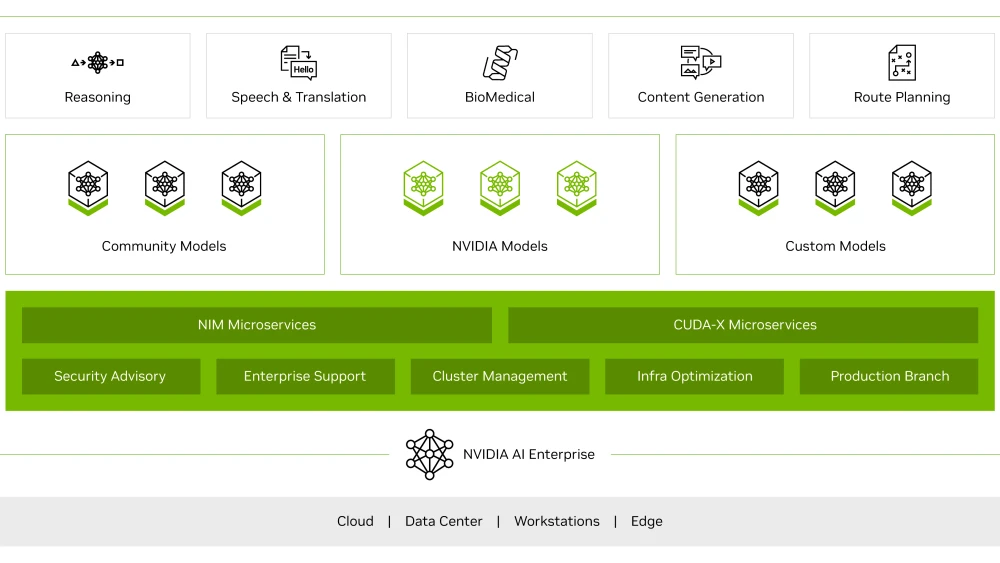

NVIDIA AI Enterprise is a platform built on NVIDIA GPU hardware that manages GPU resources, from sharing a single GPU between workloads to making multiple GPUs in a cluster work together as one. The architecture underneath is familiar if you have worked with vGPU for 3D VDI, but it does a lot more.

It also provides microservices with ready-to-use inference models (NIM), which let you pull down different generative AI models and run them on your own infrastructure. NVIDIA continuously optimizes and updates these containers. In addition, NVIDIA offers a range of AI-ready workflows, industry-specific “ready to use” solutions, and tools for developing and building your own AI workloads efficiently. It is a whole ecosystem of AI solutions for different purposes. It is licensed per GPU on-premises, or per hour for cloud workloads.
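NIM containers for large language models expose an OpenAI-compatible HTTP API, so applications can talk to a self-hosted model much as they would to a cloud service. The sketch below uses only the Python standard library and assumes a NIM container is already running and listening on `localhost:8000`; the URL, the helper names `build_chat_request` and `ask`, and the example model name are illustrative assumptions, so check the documentation of the specific NIM you deploy for its actual port and model identifier.

```python
import json
import urllib.request

# Assumption: a NIM LLM container is running locally and serving on port 8000.
NIM_URL = "http://localhost:8000/v1/chat/completions"


def build_chat_request(model: str, prompt: str, max_tokens: int = 128) -> bytes:
    """Encode an OpenAI-style chat completion request body as JSON bytes."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return json.dumps(payload).encode("utf-8")


def ask(model: str, prompt: str, url: str = NIM_URL) -> str:
    """POST the prompt to the local endpoint and return the first reply text."""
    req = urllib.request.Request(
        url,
        data=build_chat_request(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the generated text under choices[0].message.
    return body["choices"][0]["message"]["content"]


# Example usage (requires a running container; the model name is an assumption):
# print(ask("meta/llama3-8b-instruct", "Summarize our VPN policy in one sentence."))
```

Because the request goes to a model running on your own infrastructure, the prompt and any company data it contains stay inside your network.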

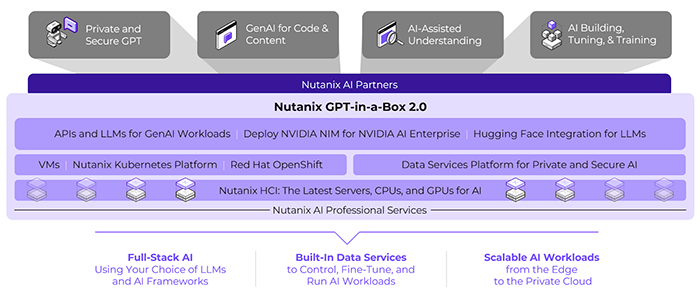

Nutanix is certified for running NVIDIA NIM and provides a ready-to-use “GPT-in-a-Box” solution that makes building, operating and scaling AI infrastructure fast, easy and reliable. If you have worked with Nutanix in the past, you know how good they are at exactly this in the datacenter. Now, with GPT-in-a-Box and Nutanix Kubernetes Service, it is easy to build your own scalable infrastructure for both modern workloads like AI and traditional IT applications.

If you want to innovate with AI, you need a secure, scalable digital platform that lets you experiment with different AI models on your own data. Right now, it is difficult to know where AI can provide value for your organization, and new models are being released extremely fast. I believe most organizations will not need to train their own models, but will instead use existing models and optimize them for their own purposes. This is what Enterprise AI is about. It puts the ability to be agile and innovative in your hands without having to deal with all the underlying complexity.
